Data Center GPUs from NVIDIA

Advanced Clustering Technologies incorporates NVIDIA data center GPUs into the HPC systems we build for our customers. NVIDIA data center GPUs offer a powerful combination of scalability, performance, and specialized features that make them highly effective for modern data-intensive applications.

Key features of NVIDIA data center GPUs include:

High Performance: NVIDIA GPUs, especially the A100 and H100 Tensor Core GPUs, deliver exceptional processing power, which is crucial for handling large-scale computations, training complex AI models, and running high-performance computing (HPC) workloads.

AI and Machine Learning Optimization: These GPUs are optimized for AI and machine learning tasks, with specialized hardware like Tensor Cores that accelerate matrix operations and deep learning algorithms. This optimization significantly speeds up training and inference for neural networks.

Scalability: NVIDIA GPUs are designed to scale efficiently across multiple GPUs, which allows data centers to build powerful systems that can tackle massive datasets and high-demand applications.

Versatility: NVIDIA offers a range of GPUs suited for different tasks within data centers, from general-purpose computing to specialized AI and deep learning applications. This versatility allows organizations to choose GPUs that best fit their specific needs.

NVLink and NVSwitch: NVIDIA’s NVLink and NVSwitch technologies provide high-bandwidth, low-latency communication between GPUs, which enhances multi-GPU setups and allows for efficient data sharing and processing across a cluster.

Software Ecosystem: NVIDIA provides a comprehensive software stack, including CUDA (Compute Unified Device Architecture), cuDNN (CUDA Deep Neural Network library), and TensorRT, which streamline development and optimization for GPU-accelerated applications.

Energy Efficiency: Despite their high performance, NVIDIA GPUs are designed to be energy efficient, which helps data centers manage power consumption and cooling requirements.
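As a small illustration of working with this software stack, the sketch below lists the GPUs in a node by calling `nvidia-smi`, the command-line utility that ships with the NVIDIA driver. The helper names `parse_gpu_inventory` and `list_gpus` are hypothetical and chosen only for this example; actual output depends on the installed hardware and driver.

```python
import shutil
import subprocess

# Query GPU name and total memory (MiB) as plain CSV, one GPU per line.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total",
    "--format=csv,noheader,nounits",
]

def parse_gpu_inventory(text):
    """Parse 'name, memory_MiB' lines into a list of (name, memory_mib) tuples."""
    gpus = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        name, mem = line.rsplit(",", 1)
        try:
            mem_mib = int(mem.strip())
        except ValueError:
            mem_mib = None  # e.g. the driver reported a non-numeric value
        gpus.append((name.strip(), mem_mib))
    return gpus

def list_gpus():
    """Return the GPU inventory, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=False)
    if result.returncode != 0:
        return []
    return parse_gpu_inventory(result.stdout)
```

Calling `list_gpus()` on a GPU node returns one tuple per device; on a machine without the NVIDIA driver it returns an empty list rather than raising.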


NVIDIA H200 NVL: Performance leap for AI and HPC

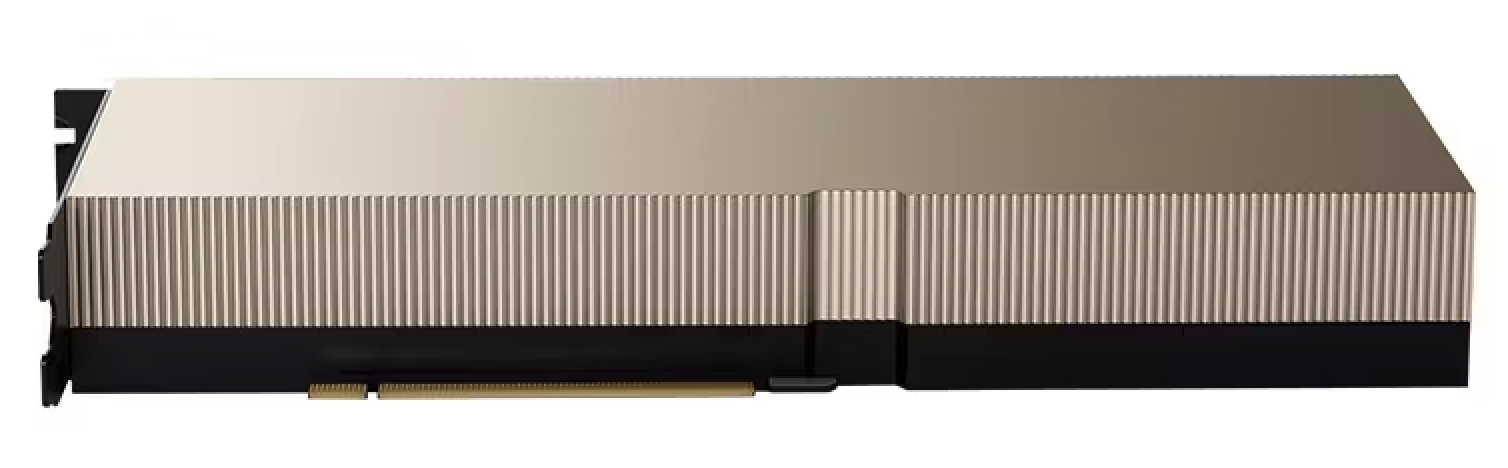

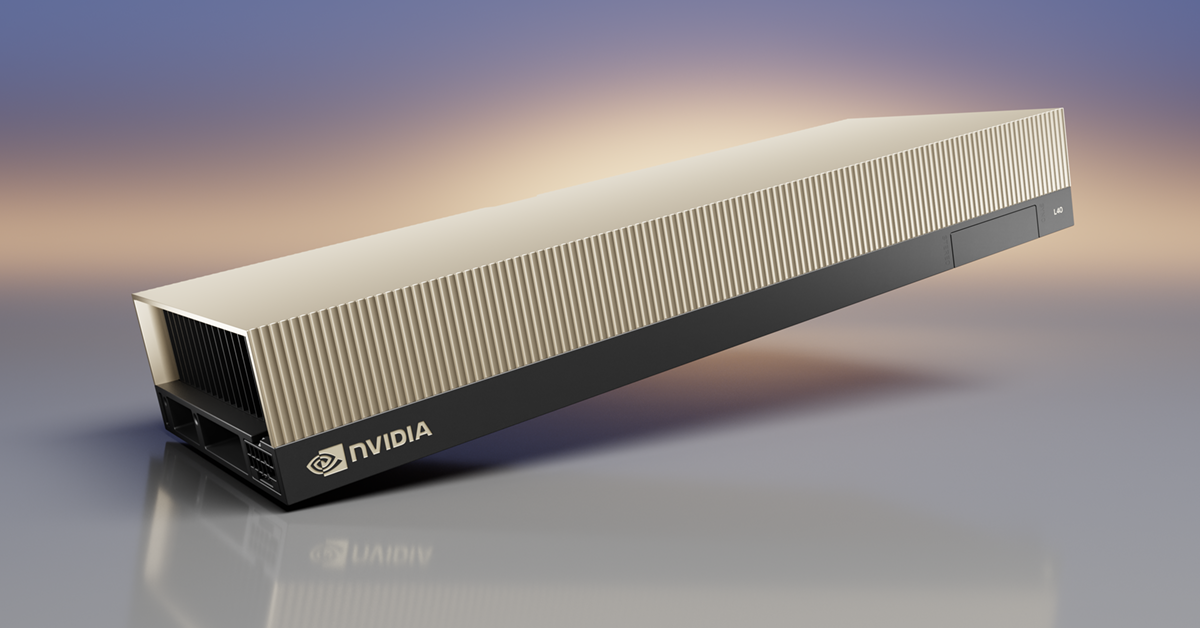

NVIDIA L40S: AI and graphics performance for generative AI

NVIDIA RTX: The world’s first ray tracing GPU

NVIDIA H200 NVL:

The NVIDIA H200 Tensor Core GPU supercharges generative AI and high-performance computing (HPC) workloads with game-changing performance and memory capabilities.

As the first GPU with HBM3e, the H200’s larger and faster memory fuels the acceleration of generative AI and large language models (LLMs) while advancing scientific computing for HPC workloads.

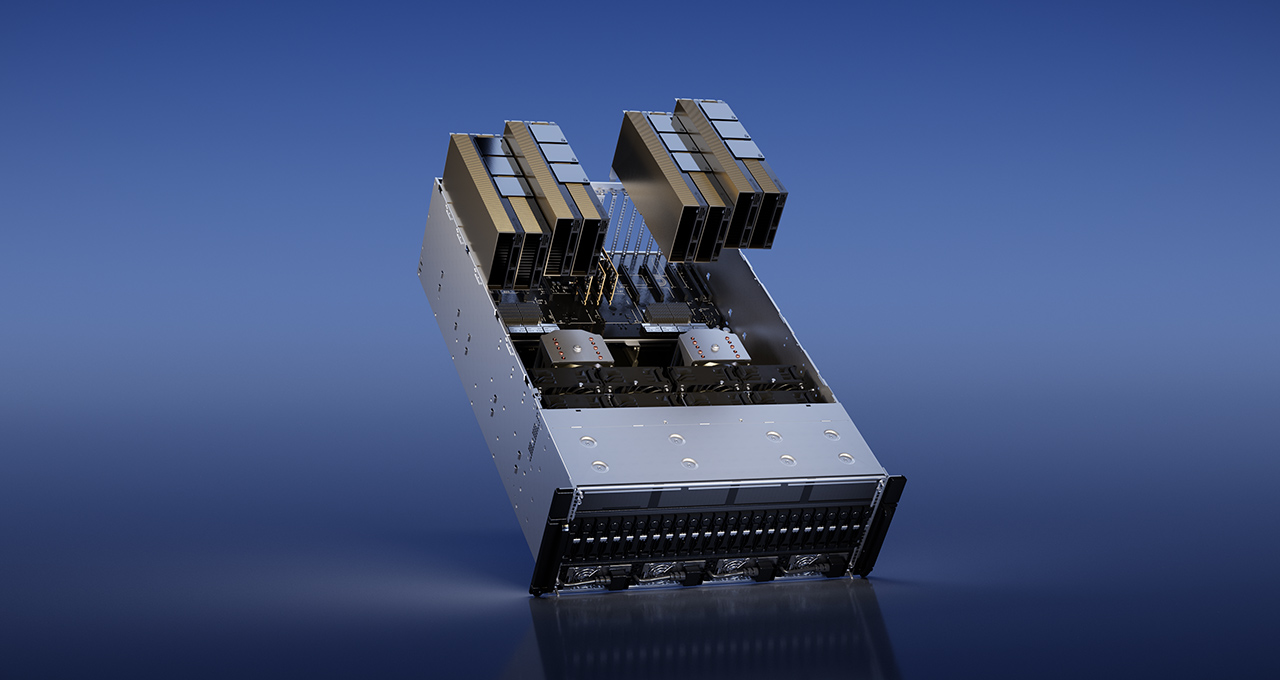

The NVIDIA HGX H200 features the NVIDIA H200 Tensor Core GPU with advanced memory to handle massive amounts of data for generative AI and high-performance computing workloads.

Memory bandwidth is crucial for HPC applications because it enables faster data transfer and reduces processing bottlenecks. For memory-intensive HPC applications such as simulations, scientific research, and artificial intelligence, the H200’s higher memory bandwidth ensures that data can be accessed and manipulated efficiently, leading to up to 110X faster time to results compared to CPUs.
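To make the bandwidth argument concrete, here is a rough back-of-the-envelope sketch. It assumes a purely memory-bound workload that sustains the full peak bandwidth, which real applications rarely do, and it uses the 141GB capacity and 4.8TB/s bandwidth from the H200 NVL specifications on this page. The function name `min_transfer_time_s` is hypothetical.

```python
def min_transfer_time_s(bytes_moved, bandwidth_bytes_per_s):
    """Lower bound on the time needed to move `bytes_moved` at peak bandwidth."""
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return bytes_moved / bandwidth_bytes_per_s

# Figures from this page; decimal units (1 GB = 1e9 bytes) are an assumption.
H200_MEMORY_BYTES = 141e9
H200_BANDWIDTH_BYTES_PER_S = 4.8e12

# Time for one full read of the GPU's memory at peak bandwidth.
one_pass = min_transfer_time_s(H200_MEMORY_BYTES, H200_BANDWIDTH_BYTES_PER_S)
print(f"One full pass over GPU memory: {one_pass * 1e3:.1f} ms")
```

Under these assumptions a single pass over the entire memory takes roughly 29 ms, which gives a feel for how quickly a bandwidth-bound kernel can sweep a large working set.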

We also offer HGX systems that support H200, B200, and B300 GPUs.

Architecture: Hopper

Memory: 141GB

Memory Bandwidth: 4.8TB/s

Interface: PCIe Gen 5

FP64: 30 TFLOPS

FP32: 60 TFLOPS

FP16 Tensor Core: 1,671 TFLOPS

INT8 Tensor Core: 3,341 TOPS

Power: 600W
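One way to read these figures together is the ridge point of a simple roofline model: dividing a peak compute rate by the memory bandwidth gives the arithmetic intensity (operations per byte) a kernel needs before it stops being limited by memory. The sketch below is only an illustration using the peak numbers listed above; sustained performance is lower in practice, and the helper name `ridge_point` is hypothetical.

```python
# Peak rates and bandwidth taken from the specification list on this page.
PEAK_OPS_PER_S = {
    "FP64": 30e12,
    "FP32": 60e12,
    "FP16 Tensor Core": 1671e12,
}
BANDWIDTH_BYTES_PER_S = 4.8e12

def ridge_point(peak_ops_per_s, bandwidth_bytes_per_s):
    """Operations per byte at which compute, not memory, becomes the limit."""
    return peak_ops_per_s / bandwidth_bytes_per_s

ridges = {
    name: ridge_point(peak, BANDWIDTH_BYTES_PER_S)
    for name, peak in PEAK_OPS_PER_S.items()
}

for name, intensity in ridges.items():
    print(f"{name}: {intensity:.2f} operations per byte")
```

Kernels whose arithmetic intensity falls below the ridge point for their precision are bandwidth-bound, which is why the memory figures matter as much as the TFLOPS figures for many HPC codes.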

HGX Systems

Model: sys-821ge-tnhr

Form factor: 8U

Allowable GPU types: NVIDIA Certified (H100, H200)

CPU: 5th Gen Intel Xeon Scalable – minimum 6538Y+

DIMMs: 32 – minimum 3TB (H200)

Drive: 15 (3 SATA; 12 NVMe)

PSU: 6x 3000W

Model: as-8125gs-tnhr

Form factor: 8U

Allowable GPU types: NVIDIA Certified (H100)

CPU: AMD EPYC 9004 series (up to 400W; minimum 9454)

DIMMs: 24 – minimum 1.5TB

Drive: 18 (2 SATA; 16 NVMe)

PSU: 6x 3000W

HGX B200 Systems

Model: sys-a22ga-nbrt

Form factor: 10U

Quantity of GPUs: 8

CPU: Intel Xeon 6 6900 series

DIMMs: 24 – minimum 3TB (H200)

Drive: 10 (NVMe U.2)

PSU: 6x 5250W

Model: AS -A126GS-TNBR

Form factor: 10U

Quantity of GPUs: 8

CPU: AMD EPYC 9004/9005 series

DIMMs: 24 – minimum 1.5TB (H200)

Drive: 18 (8 NVMe; 2 SATA)

PSU: 6x 5250W

GPU Computing with NVIDIA Tesla: Educational Discounts Available

Advanced Clustering Technologies is offering educational discounts on NVIDIA A100 GPU accelerators.


Higher performance with fewer, lightning-fast nodes enables data centers to dramatically increase throughput while also saving money.

Advanced Clustering’s GPU clusters consist of our innovative ACTblade compute blade products and NVIDIA GPUs. Our modular design allows GPU and CPU configurations to be mixed and matched while preserving precious rack and data center space.

Contact us today to learn more about the educational discounts and to determine if your institution qualifies.

NVIDIA and the NVIDIA logo are trademarks and/or registered trademarks of NVIDIA Corporation in the U.S. and other countries. Other company and product names may be trademarks of the respective companies with which they are associated. © 2021 NVIDIA Corporation. All rights reserved.


GPU Computing Systems for AI and HPC

CPU: 2x up to 128 core Intel Xeon 6 (Granite Rapids AP)

Memory: 24x DDR5 6400MHz DIMM sockets (Max: 3 TB)

Storage: 24x 2.5″ NVMe drive bays (Max: 1475 TB)

Accelerators: Max 8x NVIDIA Tesla, NVIDIA RTX accelerators

Connectivity: Onboard 2x 10Gb NICs; optional 10GbE, InfiniBand, OmniPath, 25GbE/100GbE/200GbE

Density: 5U rackmount chassis with redundant power

CPU: 2x up to 92 core AMD EPYC (Turin)

Memory: 24x DDR5 6400MHz DIMM sockets (Max: 3 TB)

Storage: 6x 2.5″ SATA/NVMe drive bays (Max: 240 TB)

Accelerators: Max 8x NVIDIA Tesla, AMD, NVIDIA RTX accelerators

Connectivity: Onboard 2x 10Gb NICs; optional InfiniBand, OmniPath, 25GbE/100GbE/200GbE

Density: 5U rackmount chassis with redundant power

Ask Us About Our GPU Systems

Advanced Clustering Technologies offers a wide range of GPU solutions. Contact us for more information.

Note about GPU warranties: Manufacturer’s warranty only; Advanced Clustering Technologies does not warranty consumer-grade GPUs.