We integrated our first InfiniBand (IB) cluster back in 2003, and it ended up on the Top500 list of supercomputers. Since then, we’ve deployed hundreds of IB clusters across North America.

These deployments have given us a working knowledge of all aspects of InfiniBand topology, and we use that expertise to determine the configuration that gets the most efficiency out of your cluster.

With the prevalence of multiple, multi-core processors in server and storage systems, overall platform efficiency as well as CPU and memory utilization depends increasingly on interconnect bandwidth and latency.

We have a long-standing relationship with the major InfiniBand vendor, which allows us to offer faster turnaround times, better pricing and outstanding support for your InfiniBand cluster.

Whether you’re buying a new cluster or upgrading an existing one, our engineers will work with you to determine which interconnect and which subscription rate is right for your needs.

From reviewing your application to analyzing your existing hardware configuration and network, we’ll apply our years of experience to configuring, testing, deploying and supporting your InfiniBand network.

InfiniBand Specifications

HCA Adapters

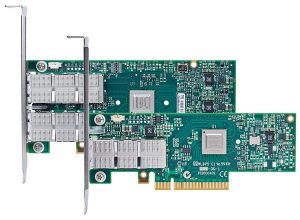

Our Pinnacle compute nodes can ship with integrated QDR or FDR IB adapters (Opteron-based nodes ship with integrated QDR adapters). We also offer discrete single- and dual-port cards. These cards include Mellanox’s Virtual Protocol Interconnect (VPI) technology, which allows these host channel adapters (HCAs) to run either as InfiniBand or as 10Gb/s or 40Gb/s Ethernet interconnects.

QDR

Quad Data Rate

- PCIe Gen 3.0 x8

- 40Gb/s

- 1 microsecond latency

- 10Gb/s Ethernet

FDR

Fourteen Data Rate

- PCIe Gen 3.0 x8

- 56Gb/s

- 1 microsecond latency

- 40Gb/s Ethernet

EDR

Enhanced Data Rate

- PCIe Gen 3.0 x16

- 100Gb/s

- Sub-microsecond latency

- 100Gb/s Ethernet
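
The bandwidth figures above mix two conventions: the QDR and FDR numbers are signaling rates on the wire, while the EDR figure is the usable data rate. As a minimal sketch, assuming standard 4x links and the per-lane signaling rates and line encodings defined by the InfiniBand specification, the Python below computes both numbers for each generation. The names `LinkGeneration`, `signaling_rate_gbps` and `data_rate_gbps` are illustrative, not part of any library.

```python
# Effective (post-encoding) data rate of an InfiniBand link, per generation.
# Assumption: 4x link width, the common width for HCAs and switch ports.
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkGeneration:
    name: str
    lane_gbaud: float   # signaling rate per lane, in Gbaud
    payload_bits: int   # data bits per encoded block
    line_bits: int      # bits sent on the wire per encoded block


GENERATIONS = [
    LinkGeneration("QDR", 10.0, 8, 10),        # 8b/10b line encoding
    LinkGeneration("FDR", 14.0625, 64, 66),    # 64b/66b line encoding
    LinkGeneration("EDR", 25.78125, 64, 66),   # 64b/66b line encoding
]


def signaling_rate_gbps(gen: LinkGeneration, lanes: int = 4) -> float:
    """Raw signaling rate summed across all lanes of the link."""
    return lanes * gen.lane_gbaud


def data_rate_gbps(gen: LinkGeneration, lanes: int = 4) -> float:
    """Rate left for data once line-encoding overhead is removed."""
    return signaling_rate_gbps(gen, lanes) * gen.payload_bits / gen.line_bits


if __name__ == "__main__":
    for gen in GENERATIONS:
        print(f"{gen.name}: {signaling_rate_gbps(gen):g} Gb/s signaling, "
              f"{data_rate_gbps(gen):g} Gb/s data")
```

The calculation shows that a QDR link's 40Gb/s of signaling leaves 32Gb/s for data after 8b/10b encoding, whereas FDR and EDR lose only about 3% to 64b/66b encoding.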

Multiple switch options

We offer many different IB switch configurations, from eight-port standalone switches to 648-port behemoths, as well as multiple 36-port switches stacked in a fat tree topology (described below). We also offer InfiniBand at different subscription rates, and we’ll work with you to determine which rate is right for you. Rely on our experience in providing optimal solutions to your high performance computing needs.

Cost-effective approach to interconnecting large clusters

Using a fat tree topology is an effective way to hold down costs without sacrificing bandwidth or latency. The key attribute of this non-blocking topology is that at every level of the switch hierarchy, the bandwidth connected downstream to the compute nodes is identical to the bandwidth connected upstream for interconnection. Because these 36-port top-of-rack switches are relatively inexpensive compared with modular chassis that house multiple line cards and spines, the savings from this approach are often large enough to pay for additional computational power, such as more nodes or better processors.
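
As a worked example of how far 36-port switches scale, here is the port arithmetic for a two-level (leaf and spine) non-blocking fat tree built entirely from k-port switches, assuming each leaf runs one uplink to every spine. Half of each leaf's ports face compute nodes and half face spines, and each spine has one port per leaf:

```latex
\begin{aligned}
\text{ports per leaf} &= \underbrace{k/2}_{\text{to nodes}} + \underbrace{k/2}_{\text{to spines}} \\
\text{spines} &= \frac{k}{2}, \qquad \text{leaves} \le k \\
N_{\max} &= k \cdot \frac{k}{2} = \frac{k^2}{2}, \qquad \text{switches} = k + \frac{k}{2} = \frac{3k}{2} \\
k = 36:\quad N_{\max} &= \frac{36^2}{2} = 648, \qquad \text{switches} = 36 + 18 = 54
\end{aligned}
```

With 36-port switches this reaches the same 648 nodes as the largest chassis listed above, using 54 fixed-configuration switches.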

Subscription rates

Not all applications require high bandwidth, and many applications scale only to a limited number of processors. In either case, setting up an oversubscribed network makes sense, because it cuts down on the number of switches needed. In an r:1 network, each leaf switch has r times as much bandwidth facing the compute nodes as facing the rest of the fabric. So instead of a 1:1 non-blocking, full-bandwidth network, you can consider a 2:1 or 3:1 oversubscribed network if your application warrants it.
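
The switch savings from oversubscription can be sketched with simple port arithmetic. Assuming 36-port leaf and spine switches in a two-tier fat tree, an r:1 leaf dedicates r/(r+1) of its ports to compute nodes and the rest to uplinks. The Python below (the function names are hypothetical) counts the switches needed at each ratio; it sizes ports only and ignores spare ports, cable lengths and whether uplinks divide evenly across spines.

```python
# Port-count sizing of a two-tier (leaf/spine) fat tree at r:1 oversubscription.
# Assumption: all switches have the same port count (36 by default).
import math


def leaf_port_split(ratio: int, ports: int = 36) -> tuple[int, int]:
    """Split a leaf switch's ports into (node-facing, spine-facing) for ratio:1."""
    if (ports * ratio) % (ratio + 1):
        raise ValueError(f"{ports} ports cannot be split evenly at {ratio}:1")
    down = ports * ratio // (ratio + 1)
    return down, ports - down


def two_tier_switch_count(nodes: int, ratio: int = 1, ports: int = 36) -> tuple[int, int]:
    """Return (leaf switches, spine switches) needed for `nodes` compute nodes.

    Port counting only: it does not check that each leaf's uplinks divide
    evenly across the spines, which in practice can mean adding a spine.
    """
    if nodes <= ports:
        return 1, 0  # a single standalone switch is enough
    down, up = leaf_port_split(ratio, ports)
    leaves = math.ceil(nodes / down)
    if leaves > ports:
        raise ValueError("too many leaves for two tiers; a third tier is needed")
    # Every spine port terminates exactly one leaf uplink.
    spines = math.ceil(leaves * up / ports)
    return leaves, spines


if __name__ == "__main__":
    for ratio in (1, 2, 3):
        leaves, spines = two_tier_switch_count(288, ratio)
        print(f"{ratio}:1 -> {leaves} leaf + {spines} spine = {leaves + spines} switches")
```

For a hypothetical 288-node cluster, this gives 24 switches (16 leaf, 8 spine) at 1:1, 16 switches at 2:1 and 14 switches at 3:1.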

Warranty information

Please note that our InfiniBand switches include manufacturer warranties, separate from the warranty service purchased for compute and head nodes. Should an issue arise, Advanced Clustering’s support team will work with you to get the issue resolved. There are multiple warranty options available.

InfiniBand cables

Maximum cable lengths are dictated by the need to maintain signal integrity and eliminate crosstalk (noise produced when many connections are packed together in a dense setting). In high performance computing, signal integrity refers to transmitting data at high bit rates over longer distances than a simple conductor could manage. If signal integrity is not properly maintained, problems such as card failures or unreliable performance can occur, and expected speeds may not be realized. Our IB cables use impedance matching to minimize crosstalk and deliver the best performance available in our lineup.

InfiniBand services

InfiniBand can be complicated. Let our team of highly experienced engineers help you configure, install and use your new IB equipment.

Services include:

- Installing your IB switch, cards and cables … so you don’t have to

- System verification … to make sure it all works

- InfiniBand overview … to show you how it works

- Review of parallel jobs … to show you why it works